Chinese startup 01.AI launched their first proprietary model, Yi-Large, this week, and its performance is comparable to that of GPT-4.

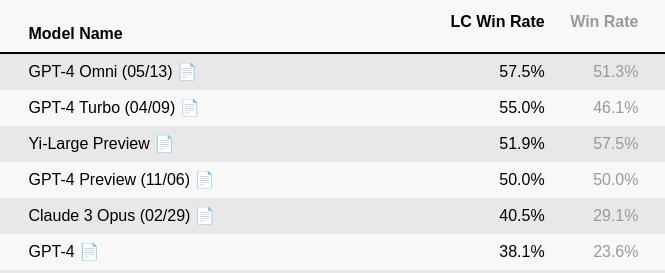

The AlpacaEval Leaderboard currently has the model ranked third.

https://tatsu-lab.github.io/alpaca_eval

The model is available through their API after sign-up, which offers both Yi-Large and Yi-Large-RAG.

Yi-1.5 is an upgraded version of Yi, continuously pre-trained for stronger performance in coding, math, reasoning and instruction following. The Chat and Base models come in versions with context lengths of 4K, 16K and 32K, and are released under the Apache License 2.0.
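
As a rough illustration of how the 4K, 16K and 32K context lengths might guide the choice of variant, the sketch below picks the smallest window that fits a prompt plus a generation budget. The token counts behind each label (4096, 16384, 32768) and the helper name `smallest_fitting_context` are assumptions made for this example, not figures or code published by 01.AI, and the mapping is not tied to any particular model size.

```python
# Assumption: "4K", "16K" and "32K" are read as 4096, 16384 and 32768 tokens.
CONTEXT_WINDOWS = {"4K": 4096, "16K": 16384, "32K": 32768}


def smallest_fitting_context(prompt_tokens: int, max_new_tokens: int = 0):
    """Return the label of the smallest window that fits, or None if none does."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # The prompt and the generated tokens share the same context window.
    needed = prompt_tokens + max_new_tokens
    for label, size in sorted(CONTEXT_WINDOWS.items(), key=lambda kv: kv[1]):
        if needed <= size:
            return label
    return None
```

For instance, a 4,000-token prompt with a 512-token generation budget no longer fits the 4K window under these assumptions, so the helper would suggest the 16K variant.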

Their current family of models includes:

- Yi-1.5 6B/9B/34B Chat and Base Model (May 2024)

- Yi-VL-6B/34B Multimodal Version (January 2024)

- Yi-6B/9B/34B-Chat Finetuned Models and Quantized Versions (November 2023)

- Yi-6B/9B/34B Base Model (November 2023)
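
For readers who want to try one of the open models listed above, here is a minimal sketch using the Hugging Face `transformers` library. The repository naming pattern (`01-ai/Yi-1.5-<size>` with an optional `-Chat` suffix) is an assumption based on how the checkpoints appear on the Hugging Face Hub, and the helper names `yi_15_repo_id` and `load_yi_15` are hypothetical; check the model cards before relying on either.

```python
def yi_15_repo_id(size: str, chat: bool = True) -> str:
    """Build an assumed Hugging Face repository id for a Yi-1.5 checkpoint."""
    sizes = ("6B", "9B", "34B")
    if size not in sizes:
        raise ValueError(f"size must be one of {sizes}, got {size!r}")
    # Assumption: chat checkpoints carry a "-Chat" suffix, base ones carry none.
    suffix = "-Chat" if chat else ""
    return f"01-ai/Yi-1.5-{size}{suffix}"


def load_yi_15(size: str = "6B", chat: bool = True):
    """Download and load tokenizer and model (needs `transformers` and `torch`)."""
    # Imported lazily so yi_15_repo_id works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    repo_id = yi_15_repo_id(size, chat)
    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(repo_id, torch_dtype="auto")
    return tokenizer, model
```

Calling `load_yi_15("6B")` downloads several gigabytes of weights, so the smallest size is the sensible starting point on a single GPU.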

Yi-6B and Yi-34B were pre-trained on 3.1T tokens of English and Chinese corpora.

Yi-9B is an extension of Yi-6B, with boosted performance in coding and mathematics. Released March 2024, it has a 200K context window.

In November 2023, 01.AI introduced their open-source large language model, Yi-34B. It is a bilingual model supporting both English and Chinese, with a context window of up to 200K tokens. The Yi series is available in three sizes (6B, 9B and 34B), and the license permits commercial use.

In January 2024, they launched a multimodal model, Yi-VL-34B, that can process images.

In an interview with Bloomberg, founder and CEO Kai-Fu Lee said he expects significant revenue in 2024. Approximately three quarters of their fundraising is invested in buying GPUs. Lee was president of Google’s search business in China until he left in 2009.

They hope to capitalise on the next wave of AI-first applications and AI-empowered business models.

Yi-1.5-34B-Chat is available on HuggingChat.